The best way to understand cloud computing

is to compare it to traditional IT computing. With on-premises computing on

our own networks, we would at some point make a capital investment in

hardware. Think of things like having a server room constructed, getting

racks, and then populating those racks with equipment such as telecom

equipment, routers, switches, servers, storage arrays, and so on.

Then, we have to

account for powering that equipment. We then have to think about HVAC,

heating, ventilation and air conditioning, to make sure that we've got optimal

environmental conditions to maximize the lifetime of our equipment.

Then there's

licensing. We have to license our software. We have to install it, configure

it and maintain it over time, including updates. So with traditional IT

computing, certainly there is quite a large need for an IT staff to take care

of all of our on-premises IT systems.

But with cloud

computing, at least with public cloud computing, we are talking about hosted

IT services. Things like servers and related storage, and databases, and web

apps can all be run on provider equipment

that we don't have to purchase or maintain. So in other words, we only pay for the services that are used.

And another part of the cloud is self-provisioning,

where on-demand, we can provision, for example additional virtual machines or

storage. We can even scale back on it

and that way we're saving money because we're only paying for what we are

using.

With cloud computing,

all of these self-provisioned services need to be available over a network. In the case of public clouds,

that network is the Internet. But

something to watch out for is vendor lock-in. When we start looking at cloud

computing providers, we want to make sure that we've got a provider that won't

lock us into a proprietary file format for instance. If we're creating

documents using some kind of cloud-based software, we want to make sure that

data is portable and that we can move it back on-premises or even to another

provider should that need arise. Then there is responsibility.

This is really

split between the cloud provider and the cloud consumer or subscriber,

otherwise called a tenant. So the degree

of responsibility really depends on the specific cloud service that we're

talking about. But bear in mind that there is more responsibility with cloud

computing services when we have more control. So if we need to be able to

control underlying virtual machines, that's fine, but then it's up to us to

manage those virtual machines and to make sure that they're updated. The hardware is the provider's responsibility.

Things like power,

physical data center facilities in which equipment is housed, servers, all

that stuff. The software, depending on what we're talking about, could be

split between the provider's responsibility and the subscriber's

responsibility. For example, the provider might make a cloud-based email app

available, but the subscriber configures it and adds user accounts, and

determines things like how data is stored related to that mail service. Users

and groups would be the subscriber's responsibility when it comes to identity

and access management.

Working with data

and, for example, determining if that data is encrypted when stored in the

cloud, that would be the subscriber's responsibility. Things like data center

security would be the provider's responsibility. Whereas, as we've mentioned,

data security would be the subscriber's responsibility when it comes to things

like data encryption. The network connection however is the subscriber's

responsibility, and it's always a good idea with cloud computing, at least

with public cloud computing, to make sure you've got not one, but at least two

network paths to that cloud provider.

A cloud is defined by resource pooling. So, we've got all this IT

infrastructure pooled together that can be allocated as needed. Rapid elasticity means that we can quickly

provision or de-provision resources as we need. And that's done through an

on-demand self-provisioned portal, usually web-based. Broad network access means that we've got connectivity available

to our cloud services. It's always available. And measured service means that it's metered, much like a utility, in

that we only pay for those resources that we've actually used. So, now we've

talked about some of the basic characteristics of the cloud and defined what

cloud computing is.

For non-techies out

there, the cloud might be an intimidating and nebulous concept. We hear about

cloud computing all the time, but what exactly does it mean?

The National

Institute of Standards and Technology (NIST) describes the basics of cloud

computing this way:

Cloud computing is a model for enabling ubiquitous,

convenient, on-demand network access to a shared pool of configurable

computing resources (e.g., networks, servers, storage, applications, and

services) that can be rapidly provisioned and released with minimal management

effort or service provider interaction.

Still confused?

In short, the cloud

is the Internet, and cloud computing is techspeak that describes software and

services that run through the Internet (or an intranet) rather than on private

servers and hard drives.

Cloud computing is

taking the world by storm. In fact, 94% of workloads and compute instances

will be processed through cloud data centers by 2021, compared to only 6% by

traditional data centers, according to research by Cisco.

So why are companies moving from traditional servers

and data centers to the cloud?

Cloud

computing allows companies to spend more time focusing on their

business instead of on the IT infrastructure. One of the reasons for this is

that most of the IT infrastructure is the responsibility of the cloud

provider.

With the

cloud we have metered usage, whereby all of the usage of cloud resources is

tracked and that's how we get billed. So, what that ends up meaning at the

accounting level is that we're dealing with a monthly recurrent operating

expense versus a capital expenditure. Which might be less frequent, but

nonetheless requires capital for things like the acquisition of hardware to

run on-premises networks. So, it's a monthly ongoing expense which often might

include a subscription plus a usage fee. But also the nice thing about it is

it can facilitate budgeting over time as we set a baseline for our usage of

cloud resources in our environment.

So, you

pay for what you use similar to a utility like electricity or water.
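
The "subscription plus usage fee" idea can be sketched in a few lines of Python. This is only an illustration: the function name, the resource names, and the prices are all made up, and real providers publish their own pricing models.

```python
def monthly_bill(subscription_fee, usage, unit_prices):
    """Return a monthly charge: a flat subscription plus metered usage."""
    usage_charge = sum(
        quantity * unit_prices[resource] for resource, quantity in usage.items()
    )
    return subscription_fee + usage_charge


# Hypothetical unit prices and one month of metered usage (illustrative only).
prices = {"vm_hours": 0.05, "storage_gb": 0.02}
usage = {"vm_hours": 200, "storage_gb": 500}

print(round(monthly_bill(10.0, usage, prices), 2))
```

If usage drops next month, only the `usage` figures change, and the bill drops with them. That is the utility-style billing described above.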

We also

have to bear in mind that the IT needs of an organization to support its

business processes will change over time. Things like the amount of disk space

that's needed, or the underlying server horsepower, maybe to perform Big Data

analytics. We have to think about lost productivity and downtime from failed,

or misconfigured, or even hacked IT systems. Then we have to recognize, in the

cloud we've got the ability to rapidly provision and also deprovision

resources. That can even be automated in some cases. Bear in mind that

deprovisioning, for example scaling in by removing virtual machine

instances that support an app, saves money because we have fewer virtual

machines running in the cloud at the same time.

In the

cloud it's also very easy to enable high availability across different

geographical regions. For example, to make sure our data or database replicas

are highly available. With Google Cloud Platform, the cloud also has a global reach, with points of

presence around the world. So, if we want to make

some content available to users in Europe even though the web site

that actually hosts the content is in North America, we can do that using things

like a cloud content delivery network,

or CDN.

We should

also plan to monitor the network link between our on-premises network and the

cloud. Assuming that we have connectivity from on-premises to the cloud,

whether it's for data center purposes or that's where end users are. We need

to make sure that's done properly because we want to make sure that we have

redundant links to the cloud. That's essentially the single point of failure

between a company that has an on-premises environment as well as a cloud

environment. The Internet links us between them and so, we need to make sure

that it's trustworthy.

Then

there's cloud economics. Consider this

example, where we evaluate the return on investment, or ROI, over 5 years. Now

that could mean that we have a payback period of 5.5 months, and that our total

cost of ownership, or TCO, reduction could potentially be upwards of 64%. Now

these numbers will certainly vary depending on your usage of the cloud. Like

the use of any type of commodity so to speak, it has to be used efficiently.

That doesn't take care of itself in the cloud. We still have to manage it

properly. It still requires proper IT workload and IT system governance.
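
To make the payback period and TCO reduction figures more concrete, here is a minimal sketch of how such numbers could be calculated. The formulas are the common textbook ones, and the dollar amounts are hypothetical values picked only so that the results line up with the example figures above.

```python
def payback_period_months(upfront_cost, monthly_net_savings):
    """Months needed for cumulative savings to cover the upfront cost."""
    return upfront_cost / monthly_net_savings


def tco_reduction_percent(on_prem_tco, cloud_tco):
    """Percentage drop in total cost of ownership when moving to the cloud."""
    return (on_prem_tco - cloud_tco) / on_prem_tco * 100


# Hypothetical inputs: a 55,000 migration cost recovered at 10,000 per month,
# and a 5-year TCO of 1,000,000 on-premises versus 360,000 in the cloud.
print(payback_period_months(55_000, 10_000))              # 5.5 months
print(round(tco_reduction_percent(1_000_000, 360_000)))   # roughly 64
```

Different usage patterns give very different results, which is why the numbers always have to be worked out for a specific environment.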

So, what

are some factors, then, that could feed into some of these potential cost

savings? Well, one is less time spent updating and managing IT resources,

because the bulk of that is the responsibility of the public cloud provider.

Also high availability. We're more likely to have systems left up and running

over the long term if they're hosted in different data centers owned by a

cloud provider than we are on-premises. Then there's the rapid provisioning

and deprovisioning or the scalability of resources. So, if we offer some kind

of online retail service, then during holiday seasons we might have

it configured to auto-scale, adding virtual machine instances to support

the peak demand. But then when that demand disappears, so do the extra virtual

machine instances. So, we're only paying for the peak capacity while it's

required. And the lessened risk of downtime, besides high availability, is

also something we consider in the SLA, the service level agreement. The

service level agreement is a contractual document between the cloud provider

and the cloud consumer. And one of the many things it will cover is things

like expected uptime, and the consequences of that not being met.
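
An uptime figure in an SLA is easier to reason about when it is turned into a downtime budget. The sketch below assumes a 30-day month and an illustrative 99.9% uptime target. Actual SLAs define their own measurement periods and exclusions.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Minutes of downtime permitted in the period for a given uptime target."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


# An illustrative 99.9% target over a 30-day month allows about 43.2 minutes.
print(round(allowed_downtime_minutes(99.9), 1))
```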

Public Clouds

There are

varying cloud models, one of which is the public cloud. The public cloud is

accessible to all users over the Internet, potentially. Now, of course,

they're going to have to sign up, either initially for a trial account, or

initially or after the trial is expired for a paid account. But nonetheless,

the public cloud is accessible to individuals. So, individual consumers as

well as organizations, government agencies, academic institutions, and so on.

So, this means then that with a public cloud environment, the public cloud

provider has data centers around different geographical locations in the

world. And as a result, they also have multiple cloud tenants or customers. Of

course, each cloud tenant or customer, their configurations, and their data

are kept completely separate from other cloud tenants. Otherwise, nobody would

use the service, whether it be individuals or organizations.

Cloud

provider hardware is owned by the cloud provider and exists in data centers in

different regions around the world physically. So, what we end up having then

is a large scale of pooled resources. Things like servers with a lot of

horsepower that can act as hypervisors and run virtual machine guests and

applications. Things like Docker containers. Also, we have things like pooled

resources in the form of network equipment. That can be configured at a higher

level by cloud tenants. Things like routing paths and firewall rules. Another

example of a pooled resource, of course, would be storage that customers or

tenants can provision at a moment's notice, or deprovision at a moment's

notice, as well. So, the cloud provider, then, benefits from economies of

scale. Due to the large number of cloud tenants or customers, it allows them

to be able to acquire all of these facilities containing all of this physical

computing equipment. And to assure that they meet specific security standards,

so that they are trustworthy, and customers will actually use their service

and pay for it. And so as a result, the economies of scale mean that users can

end up perhaps with a small subscription fee but really, they only pay for

what they are using. And that is in quite a stark contrast to the purchase of

equipment on premises, and the licensing of software, where once you've purchased

it, it's yours.

Then we

have to consider network connectivity to and from the public cloud. Now,

normally this is done over the Internet. Certainly, for individuals this is

the case and even for most organizations. So, we have access to the public

cloud provider services through the Internet. In the corporate environment,

user devices like mobile smartphones, laptops, desktops will maybe reside on a

corporate on-premises network that connects over the Internet to the cloud.

Now, that can also happen even through a VPN if required, if we need that

encrypted tunnel or if we want to link an on-premises network directly with a

cloud-based network. Now, also, there's the option of a private dedicated

connection.

With

Google Cloud Platform, that's called Google

Dedicated Interconnect. We'll get into the details later. But for now,

essentially, it's a dedicated network link from your on-premises network or

data center to the Google Cloud without going through the Internet. Now, if we

are going to use network connectivity such as the Internet, we should consider

redundant network paths. And ideally, that would be network connections from

different Internet service providers. We don't want a single point of failure.

So that if there's a problem with one Internet service provider, we can fall

back to our secondary Internet service provider's network link to reach the Google public

cloud.
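
The benefit of redundant network paths can be estimated with a little probability. The sketch below assumes that the links fail independently of one another, which is the reason for using different Internet service providers, and the availability figures are illustrative.

```python
def combined_availability(*link_availabilities):
    """Probability that at least one link is up, assuming independent failures."""
    probability_all_down = 1.0
    for availability in link_availabilities:
        probability_all_down *= 1.0 - availability
    return 1.0 - probability_all_down


# Two links that are each up 99% of the time: about 0.9999 combined.
print(round(combined_availability(0.99, 0.99), 6))
```

If both links share the same physical path or the same provider, the independence assumption no longer holds and the real figure will be lower.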

Private Clouds

Besides

the public cloud type, we also have private clouds. Private clouds are

different in that they're accessible only to a single organization. Whereas

public clouds are accessible to anyone that wants to sign up over the

Internet. So, it's organization-owned hardware infrastructure that the

organization is responsible for configuring and maintaining over time.

However, a private cloud still adheres to the exact same cloud characteristics

that a public cloud does such as self-provisioning. Whether it's through some

kind of a web user interface and the rapid elasticity of pooled IT resources,

such as the ability to use a web interface to quickly deploy virtual machines

or additional storage, maybe for use by a particular department within the

organization.

So,

you'll see that private clouds in larger enterprises are often used for

departmental chargeback. Another common characteristic of a cloud is metered usage. So, all the usage of resources

is tracked and then, in this case, it's charged back to departments that use

those IT resources.
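
Departmental chargeback boils down to adding up metered usage per department. Here is a minimal sketch. The record layout, department names, and internal rates are hypothetical.

```python
from collections import defaultdict


def chargeback(usage_records, rates):
    """Total the cost of metered usage for each department."""
    totals = defaultdict(float)
    for department, resource, quantity in usage_records:
        totals[department] += quantity * rates[resource]
    return dict(totals)


# Hypothetical usage records: (department, resource, quantity used).
records = [
    ("sales", "vm_hours", 100),
    ("sales", "storage_gb", 50),
    ("hr", "vm_hours", 10),
]
rates = {"vm_hours": 0.5, "storage_gb": 0.25}  # assumed internal rates

print(chargeback(records, rates))  # {'sales': 62.5, 'hr': 5.0}
```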

A private

cloud can also be extended into the public cloud. Now, that's where we get

into what's called a hybrid cloud, and

we might use that for something called cloud

bursting. Cloud bursting essentially means that, once we've depleted

our on-premises resources, whether that be the number of virtual machines we

can run, or we've consumed all of our storage capacity on-premises. We've got

a link to the cloud as a secondary plan B that can be used to provision, in

our case, maybe additional virtual machines or additional storage. And in some

cases, it won't even be known to the end user, this is actually happening into

a different environment, into the public cloud.
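
The placement decision behind cloud bursting can be sketched as "fill on-premises capacity first, then overflow to the public cloud". The function below is a simplified illustration with made-up names. A real implementation would also have to consider networking, data location, and cost.

```python
def place_workloads(requested_vms, on_prem_capacity):
    """Fill on-premises capacity first, then burst the remainder to the cloud."""
    on_premises = min(requested_vms, on_prem_capacity)
    public_cloud = requested_vms - on_premises
    return {"on_premises": on_premises, "public_cloud": public_cloud}


print(place_workloads(requested_vms=12, on_prem_capacity=8))
# {'on_premises': 8, 'public_cloud': 4}
```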

The

private cloud is sometimes the only real choice we have, because regulations

might prohibit or limit the use of public cloud providers. So, if we're

dealing with some kind of sensitive government data, we might be prohibited

from letting it leave a specific network, for example one under military control if it's that type

of information.

In a

private cloud, the organization has way more IT responsibility. Now, that

comes in the form of the cost of acquiring all the equipment and paying

personnel to configure it and maintain it over time. Fault tolerance

is also the responsibility of the organization. So, the organization is responsible for the installation,

configuration, and maintenance of IT equipment for the private cloud.

Now,

organizations can have internal SLAs or service level agreements that

guarantee uptime, for example, to departments that pay for their usage of

those private cloud resources. And fault tolerance might be achieved by using clustering on-premises: with multiple servers that

offer an app, if a server node goes down, then another one can pick up the

slack and continue running the app.

The

organization, in a private cloud, also has full

control of that environment in how it's configured, how it's maintained

and updated.

And, of

course, there's definitely a further degree of

privacy depending on the configuration. But generally, this is true

because we don't have any data that is leaving the on-premises network. That's not to say that a private cloud is

always more secure than the public cloud. Not necessarily true at all. But

there is more privacy.

Community Clouds

We've

already discussed public and private clouds. Another cloud type is a community

cloud. A community cloud uses cloud provider IT infrastructure and pooled

resources. So, really that's just cloud computing unto itself. However, what

makes it a little bit different from a public or a private cloud is that

the same needs or requirements exist across multiple tenants.

Now, of course, that means that if the public cloud provider also offers

public cloud computing to anyone over the Internet, that there is isolation

from those public tenants.

In some

cases, a community cloud would be the perfect solution to remain compliant

with certain industry regulations. So, a

community cloud must ensure regulatory compliance.

So, for

example, for regulatory compliance, in terms of security, we might use G Suite

for Government. Now, there is also FedRAMP compliance, where FedRAMP stands for

the Federal Risk and Authorization Management Program, under which US government

departments have to have certain security controls in place. Then on the

medical side there's HIPAA compliance. HIPAA stands for the Health Insurance

Portability and Accountability Act, and again, as usual, it's really about

data governance on the security side: making sure that data is protected with

reasonable encryption, whether it be data at rest, that is, stored data,

or data being transmitted, and configuring things like firewalls

and intrusion detection systems appropriately. Other possible regulatory compliance items

would deal with things like long term data retention policies and archiving

and making sure that it's immutable. In other words, that something that's

archived cannot then be modified.

Hybrid Clouds

You can

think of a hybrid cloud as really being the best of both worlds. When we talk

about both worlds we're talking about on-premises

computing environments as well as cloud

computing environments working together at the same time. Now this

would be something that's common with public cloud adoption for an

organization. Where we might run a private and public set of IT workloads in

parallel or together as we migrate over time. Now that would mean moving data

or systems or virtual machines, or even converting on-premises

physical servers to virtual machines in the cloud. That physical to

virtual conversion is called P2V. We can

also use a hybrid cloud as a way to extend our private network into the cloud.

And often that would be done for things like cloud bursting to meet peak

demands. So that when we've depleted the IT resources available on-premises,

we simply seamlessly extend into the public cloud to use additional resources

there.

The

migration of our on-premises systems and data can take a long time. So for

example, we might have data that's stored on-premises and in the cloud. And

maybe that is our backup solution, it's an off-site backup. So we might have

an appliance on-premises that allows users to access data. But at the same

time maybe it synchronizes or replicates data to the cloud for backup

purposes. Could also be the other way around. We might also have cloud data

presented to an on-premises appliance, which then presents itself to backup

software on-premises as something like a virtual tape library. So our

on-premises backup software just thinks, oh yeah, it's another regular backup

source.

Now, in

the case of migrating very large amounts of data into Google Cloud, it

might be infeasible to do that over even the highest-speed

Internet connections. So instead, we might look at something like the Google Transfer Appliance. And what this

really means is that we've got an on-premises storage appliance that we

essentially copy data to. And that appliance is then physically shipped to a Google Cloud Platform data center where

the data is then copied onto their infrastructure.
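
A quick back-of-the-envelope calculation shows why shipping an appliance can beat the network. The sketch below estimates transfer time from data size and link speed. The `efficiency` parameter is an assumption standing in for protocol overhead and link sharing, and the sizes use decimal units (1 TB = 10^12 bytes).

```python
def transfer_days(data_terabytes, link_mbps, efficiency=0.8):
    """Estimate the days needed to push data over a network link."""
    bits = data_terabytes * 1e12 * 8
    seconds = bits / (link_mbps * 1e6 * efficiency)
    return seconds / 86_400


# 1 PB (1000 TB) over a fully used 1 Gbps link takes about 92.6 days.
print(round(transfer_days(1000, 1000, efficiency=1.0), 1))
```

Even with a perfectly used gigabit link, a petabyte takes around three months, so physically shipping a storage appliance can be the faster option.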

Another

possible option to consider when we talk about hybrid clouds is the Google Dedicated Interconnect feature. We'll

focus on its details later. But what it really lets us do is bypass the

Internet by having a private dedicated network

link from our on-premises network directly into the Google Cloud. Now, that

normally means that we have more predictable

throughput than we would by using a shared connection over the Internet. And

one might argue it might offer more security since it's private. Now of

course, over the Internet we could also achieve security by using a VPN tunnel. But Google Dedicated Interconnect

is yet another consideration when we think about hybrid cloud computing.

Service Models in Cloud Computing

- Software as a Service (SaaS)

One type

of cloud computing service model is software as a service, which is otherwise

simply called SaaS. Now, really what this is, is application or office

productivity software that's hosted on provider equipment. And it's accessible

over a network such as the Internet. So normally, it requires only a web

browser. It might require some plugins. But usually, the web browser and a

plugin would be the extent to what's required to access SaaS services. And

often they'll offer some degree of customization. Where you can configure

maybe the visual appearance or skins or certain security settings related to

the specific SaaS tool you're using.

For

example, we might be using office productivity tools like word processors through

a cloud provider. With software as a service, because it is cloud computing,

we pay for the usage of a service. For example, we have Gmail and G Suite,

which are considered software as a service offerings through Google. And in

the case of Gmail, whether it's being used by an individual or a

large enterprise determines the cost, for example in terms of the storage space

for archived messages or the number of messages in and out.

Software

as a service relies on virtualization. And this also means that we've got

tenant isolation. Now the virtualization doesn't necessarily mean virtual

machine operating system virtualization. It could also be application

virtualization using what are called application containers. Either way, we have no control of the underlying virtual

machines, also known as VMs. In the end, as a cloud consumer, we

don't really care as long as the isolation is in place. So for example, if I'm

using a cloud Gmail solution, I want to make sure that my organization's Gmail

accounts are not in any way accessible to other Google cloud tenants. So when

we talk about software as a service, yes, it does rely on virtualization of

some kind at some level. However, that's not visible and certainly its

configuration is not available to us as cloud consumers. That configuration,

including things like making changes or applying patches is the responsibility

of the cloud provider. Software as a service also removes the need for things

like installing software and licensing it. So really, it transfers the IT

management responsibility from our organization to the cloud provider instead.

With

software as a service, one business consideration is vendor lock-in. So we

might take a look at the service level agreements, also known as SLAs, for the various cloud

services we use from a specific cloud provider, because we

want to make sure that if we decide to stop using a cloud service, whether to

go back on-premises or to switch to a different public cloud provider,

we can. And that might mean that we have to ensure that data can be

exported in a standard format from one provider and then imported to another.

Or even live migrated over the Internet.

For example, with Google, we have the option

of actually migrating Amazon Web Services storage data from Amazon Web

Services directly into Google Cloud Storage. We then have to think about our

network connection. Because when we depend on software as a service, that

network connection to the cloud is our single point of failure if we've only

got one network connection. So, there is a

dependency on connecting to the cloud provider. We should also make

sure that we acquire sufficient bandwidth in that connection. And that would

depend upon, for instance, the number of users that would concurrently be

using software as a service through that network link. And again, due to

having a single point of failure, we should really consider having redundant

network paths. In the case of Internet connections, that would be multiple

Internet connections ideally in a perfect world through different Internet

service providers.

- Infrastructure as a Service (IaaS)

Now we

will look into infrastructure as a

service. Just like software as a service is a different type of cloud service

model, well, so is infrastructure as a service and it's normally just called IaaS. It relies on virtualization, for

example, working with virtual machines, which

are also known as VMs. When we deploy a virtual machine

instance, that's infrastructure as a service. When we provision new storage in

the cloud, that is infrastructure as a service. So are things like network

configurations, like firewall rule sets. It's all part of our network

infrastructure. Physical equipment that makes all of this possible is actually

housed in Google Cloud data centers around the globe. Now because that

acquisition of hardware, that expense in powering it and cooling it and so on

is done by Google, it’s their responsibility. So for us, the cloud customer,

it reduces capital expenditures on our end. All we do is pay for what we use,

which is really just an operational expense.

Infrastructure

as a service can be provisioned using the Google Cloud console, so using the

web GUI. Or of course it can be provisioned and managed programmatically

through various APIs, or even using the gcloud command line tool. We provision

and manage infrastructure as a service over the network. And it also supports

scalability, on-demand where we pay for the usage. So if we decide, for

example, we want to provision four new virtual machines, we can do that in a

matter of minutes with a few clicks. The same is true if we want to

provision additional storage, or to deprovision

resources that we no longer need in order to save on costs. This might even be

automated. So we might even configure something like a VM instance group in

Google Cloud Platform and configure it with a specific number of instances to

support things like autoscaling. So that

when we have a peak demand in an app, we scale out horizontally. We add more

virtual machines to support the app and when that demand declines, we scale

back in by reducing the number of virtual machines.
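
The scale-out and scale-in decision can be sketched as a small function that works out how many instances the current load needs and keeps the answer within configured limits. This is a simplified illustration with hypothetical parameter names, not how the Google Cloud autoscaler is actually implemented.

```python
import math


def desired_instances(current_load, capacity_per_instance,
                      min_instances=1, max_instances=10):
    """Return the instance count needed for the load, clamped to the limits."""
    needed = math.ceil(current_load / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Hypothetical load in requests per second; each instance handles 100.
print(desired_instances(950, 100))  # 10 during peak demand (scale out)
print(desired_instances(120, 100))  # 2 once demand declines (scale in)
```

The minimum keeps the app available when it is idle, and the maximum caps how much a traffic spike can cost.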

Google Cloud Storage is definitely considered

infrastructure as a service. All of the network configurations are also part

of infrastructure, and this uses something called software defined networking. Now, that's not specific to Google

Cloud computing. It's a standard cloud computing term that's often referred to

as SDN. And what it really means is that

we, the cloud customers, have an easy way of configuring network resources.

Deploying

cloud virtual networks and IP ranges and firewall rules without actually

communicating directly with the underlying physical network hardware that

makes all of that possible. Of course, we would then deploy virtual private clouds or VPCs. In

other words, a VPC is a virtual network in the cloud. These are

simply virtual network configurations that we define in the cloud. And then we

deploy our resources like virtual machine instances into the VPCs. We

mentioned that we have firewall rule sets that can be configured for a VPC or

even specific VM instances that control inbound or outbound network traffic.
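
To show how a firewall rule set controls inbound traffic, here is a simplified model. It assumes that the rule with the lowest priority number is checked first, that the first match wins, and that unmatched traffic is denied. The rule format is invented for this sketch and is not the Google Cloud API.

```python
import ipaddress

# Hypothetical inbound rules; a lower priority number is evaluated first.
RULES = [
    {"priority": 100, "action": "allow", "source": "10.0.0.0/8", "port": 22},
    {"priority": 200, "action": "allow", "source": "0.0.0.0/0", "port": 443},
]


def evaluate(rules, source_ip, port, default="deny"):
    """Return the action of the first matching rule, or the default."""
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r["priority"]):
        in_range = address in ipaddress.ip_network(rule["source"])
        if in_range and port == rule["port"]:
            return rule["action"]
    return default


print(evaluate(RULES, "10.1.2.3", 22))      # allow: internal SSH
print(evaluate(RULES, "203.0.113.5", 22))   # deny: SSH from the Internet
print(evaluate(RULES, "203.0.113.5", 443))  # allow: HTTPS from anywhere
```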

So what

is infrastructure as a service normally used for?

So, infrastructure as a service would commonly be

used as follows. Often it will be used for testing purposes, because it's so quick and

easy to provision, for instance, virtual machines that are isolated from

others in a VPC. So it's sandboxed, maybe to test an application quickly. It might also be used

to host a web site, whether it's for

private organizational use, or it's a public-facing

web site. We might use infrastructure as a service for cloud storage and/or backup, or even for high

performance computing or HPC, where we can configure clusters for the purpose

of parallel computing, which you might use for things like Big Data analytics.

- Platform as a Service (PaaS)

Platform

as a service is yet another type of cloud computing service model, and it's

often simply called PaaS. Now it's related more to applications, the

development of applications, and then the deployment of them. Whether that's

in a staging or test environment or deploying it into production. So the focus

then is on the application rather than the underlying supporting resources

like network configurations, virtual machines (or virtual servers), and storage.

Platform

as a service-specific resources would include things like development tools.

Now that would include also APIs that expose cloud functionality to developers

in a variety of different languages. And that's definitely the case with

Google Cloud Platform. Many of the cloud services are available to developers

in languages like C#, Python, Java and so on. Also platform as a service deals

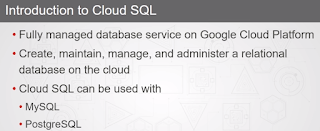

with databases. So if we want to deploy a MySQL database, for example, in the

cloud the underlying complexities like virtual machines, installing the

software and so on, that's already taken care of in most cases. We simply tell

our environment, our GUI environment, or whether we're doing it through the

command line, that we want to deploy some kind of a database in the cloud. We supply a

couple of parameters, such as the number of replicas or whether we want to

use our own license, and it just happens very quickly.

Other

types of PaaS resources would include things like business intelligence tools

or Big Data analytical tools. These are essentially like extensions that can

take data that's been massaged through our platform as a service use. And then

we can gain insights from them that otherwise wouldn't readily be apparent.

Some examples of Google Cloud platform as a service offerings would include

hosted MySQL databases or even the Docker Container

Registry. Docker containers are essentially a way to isolate

applications from one another, much as virtual machines isolate operating

systems from one another.

Disaster Recovery as a Service (DRaaS)

Even

though it doesn't sound like a good idea, planning for failure is a good idea.

It's really related to disaster recovery. In the cloud, we're really talking

about Disaster Recovery as a Service, otherwise called DRaaS. Now with traditional on-premises disaster recovery,

otherwise simply called DR, we have things like off-site backups to protect

data. Failover clustering, so that if we've got a critical app running on a

host that fails, that app can then run on another host within the cluster, and

in some cases, with zero downtime. We then have machine imaging that we can

then use to quickly get a system back up and running in the event of a

disaster. And then on the facility side, we've got power generators in case the

power grid goes down. There are battery-powered lights and so on. Now that's

with a traditional on-premises disaster recovery mechanism.

Now in

the cloud, with Disaster Recovery as a Service or DRaaS, we have a much smaller capital expenditure than traditional

disaster recovery. Actually there's no capital expenditure really because in

the cloud, we have an ongoing operational expense instead. So that means

dealing with things like data backups, maybe from on-premises, to the cloud,

and also long-term data archiving to the cloud. We might even have a hybrid

cloud solution where we extend our on-premises workloads into the Google

Cloud. So often that might be done in the case of disaster recovery so that if

we have a problem with an on-premises IT workload, it can fail over into the

cloud as required.

So

Disaster Recovery as a Service then will vary from one public cloud provider

to the next. But generally speaking, it allows us to utilize cloud provider

infrastructure in case we have a failure of some kind with our on-premises

environment.

Summary

The public cloud is available to all over the

Internet. It might require signing up for an account, but potentially anyone

over the Internet, individual or organization, can sign up for public cloud

computing services.

A private cloud is different. It has all

of the cloud characteristics that a public cloud would have. Things like rapid

elasticity, self-provisioning, metered usage and so on. However, it's all

under the control of a single organization. So it's an organization's

infrastructure on-premises. They've paid for it, they're responsible for it,

and they manage it. Hence, it's a private cloud. But take note that just

because you're using virtualization on-premises does not mean you have a

cloud. You have to meet the cloud characteristics of metered usage,

self-provisioning, rapid elasticity, broad access, and so on.
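
As a rough illustration of that point, the sketch below checks an environment against the five characteristics this document names. The function names and the sample feature sets are assumptions made up for the example.

```python
# The five characteristics of cloud computing named in this document.
CLOUD_CHARACTERISTICS = frozenset({
    "broad network access",
    "on-demand self-service",
    "resource pooling",
    "measured service",
    "rapid elasticity",
})


def missing_characteristics(environment_features):
    """Return the characteristics the environment lacks, sorted by name."""
    return sorted(CLOUD_CHARACTERISTICS - set(environment_features))


def is_cloud(environment_features):
    """An environment counts as a cloud only if nothing is missing."""
    return not missing_characteristics(environment_features)


# Hypothetical on-premises setup that only has virtualization in place.
virtualized_only = {"virtualization", "resource pooling"}
print(is_cloud(virtualized_only))                 # False
print(missing_characteristics(virtualized_only))
```

Virtualization on its own does not appear in the list, which is why the hypothetical environment above does not qualify.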

A community cloud means that we've got the

infrastructure available but really for special needs, so organizations that

have similar computing requirements. Often these relate to

isolation and security, and maybe even certain types of connections or certain

app availability. Those similar computing requirements are best served through

what's called a community cloud.

A hybrid cloud uses on-premises as well as cloud

resources in the public cloud environment. So for example, we might have a VPN

link between our on-premises network or data center and the Google Cloud VPC

or a virtual network in the Google Cloud. We might have on-premises clients

that access Google Cloud virtual machine (VM) instances, which might also happen

through that VPN link. Now, in some cases a hybrid cloud could be a

temporary solution during cloud adoption and the migration of data and IT

workloads from on-premises to the cloud. But a hybrid cloud could also be

a longer-term solution for cloud bursting,

which means that we have depleted our on-premises IT resources and now need to

use public cloud resources. It might also be used for high availability, where

we use it as a disaster recovery solution:

if we have a failed workload on-premises, we might have it configured to fail

over to the cloud.
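
Cloud bursting can be sketched as a simple placement rule: fill on-premises capacity first and send only the overflow to the public cloud. The function name `place_workload` and the abstract "units" of capacity are assumptions for illustration, not a real scheduler.

```python
def place_workload(requested_units, on_prem_capacity):
    """Split a request between on-premises and public cloud capacity.

    On-premises capacity is used first; anything beyond it bursts to the cloud.
    """
    if requested_units < 0 or on_prem_capacity < 0:
        raise ValueError("units and capacity must be non-negative")
    on_prem = min(requested_units, on_prem_capacity)
    burst = requested_units - on_prem
    return {"on_prem": on_prem, "cloud": burst}


# Demand exceeds what we have on-premises, so the remainder bursts to the cloud.
print(place_workload(120, 100))  # {'on_prem': 100, 'cloud': 20}
# Demand fits on-premises, so nothing is sent to the cloud.
print(place_workload(60, 100))   # {'on_prem': 60, 'cloud': 0}
```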

Software as a service or SaaS is often in the

form of end-user productivity software where the cloud provider is responsible

for the maintenance of this software. The installation, the general

configuration, updating and so on. So in the Google Cloud we might use

software as a service offerings such as G Suite, or even using Gmail.

Infrastructure as a service, or IaaS, is

really based on IT infrastructure components. So that would be things like

virtual machines, or cloud storage, or cloud network configurations such as

VPCs, or IP address ranges we configure for certain VPC subnets, or firewall

configurations, or VPN connections, and so on. So in the case of Google Cloud,

infrastructure as a service would include virtual machine instances, VPCs,

which are virtual networks in the cloud, and the Google Cloud Storage offering.

Characteristics of cloud computing

What makes a cloud a cloud? The five defining

characteristics are:

- Broad network access
- On-demand self-service
- Resource pooling
- Measured service
- Rapid elasticity

With

traditional IT computing, we have an on-premises computing environment, which

requires a capital investment in hardware. We then have to power the

equipment. We have to ensure that we have proper HVAC, that's Heating,

Ventilation, and Air Conditioning to make sure that equipment runs smoothly.

Then we have to license software. After we acquire the software, it needs to

be installed, configured, and maintained, including upgrades and even updates.

Now in

the cloud, we're talking about hosted IT services on the Google Cloud

Platform, that's on Google's equipment in their data centers around the world.

That includes servers, storage, databases, web apps, and so on. And just like

a utility, such as electricity, with cloud computing we pay for the services

that we use. There's also self-provisioning on demand, where we can provision cloud

resources, or deprovision them if we need less, either programmatically, from a

command line, or using a GUI. Also, the services are all

accessible over a network such as the Internet. But we should always be

careful with cloud computing to avoid vendor lock-in. That means making sure

that we have the ability to export data to standard formats or to

migrate data to other providers should we have a need in the future.

In the

cloud, there is often shared responsibility of taking care of the IT workload

running in the cloud. But the degree of that responsibility depends on the

specific cloud service that we're talking about. For example, if we're talking

about a hosted database in the cloud, maybe the underlying virtual machine

operating system and updates are taken care of by the cloud provider. More

responsibility does, however, mean more control. Hardware is the provider's responsibility, whether we're

talking about network routers and switches, storage arrays, physical

hypervisor servers, and so on. The software, in some cases, will be the

provider's responsibility, such as in the case of something like Gmail.

However, the subscriber has some responsibility in terms of using it and

configuring it to serve their business needs. So it's a little bit of both, in

that case, with software.

When it

comes to the creation of users and groups, which in the Google Cloud Platform

falls under IAM, Identity and Access Management, that's the subscriber's

responsibility. It's not Google's responsibility. Just like the creation and

management of data is the subscriber's responsibility. Security, in some

cases, can be the subscriber's responsibility. But the provider also has a

role in that, in making sure that their data centers, the equipment, and the

staff are all falling under the appropriate security recommendations to run a

proper IT cloud environment. The network connection, of course, is the

subscriber's responsibility. And that's why often it pays to have redundant

links, ideally through different Internet service providers, to ensure that we

can get to the Google Cloud.

Google

manages the physical data center facilities that house all of the racks of

equipment. So they deal with things like hardware physical security, such as

locking up racks of equipment. They deal with the physical network

infrastructure links and the virtualization infrastructure, which really means

having the hypervisors that can run the virtual machines on them.

The

subscriber has a responsibility with the virtual machine (VM) instances that they

might deploy. How

they configure and create policies, such as how to control firewall rules and

also how to control data retention, is under the subscriber's

responsibility, in this case. Dealing with user credentials, for example whether

multi-factor authentication is configured, is the subscriber's responsibility.

So is whether data at rest or data in transit is encrypted, and also what

type of data stores are being used to hold cloud-based storage information.
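
The split of responsibilities described above can be summarized as a small lookup table. This is only a sketch of the transcript's own examples; the dictionary keys and the helper name `items_for` are made up for illustration, and the exact split always depends on the specific cloud service.

```python
# Who is responsible for what, following the examples in this section.
RESPONSIBILITY = {
    "physical data center": "provider",
    "hardware": "provider",
    "virtualization infrastructure": "provider",
    "software": "shared",    # e.g. Gmail: provider runs it, subscriber configures it
    "security": "shared",
    "users and groups (IAM)": "subscriber",
    "data": "subscriber",
    "network connection to the cloud": "subscriber",
    "firewall rules": "subscriber",
    "data retention policy": "subscriber",
    "multi-factor authentication": "subscriber",
}


def items_for(party):
    """Return the items owned by 'provider', 'subscriber', or 'shared'."""
    return sorted(item for item, owner in RESPONSIBILITY.items() if owner == party)


print(items_for("provider"))
print(items_for("shared"))
```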

The cloud

really has a number of characteristics, such as resource pooling, having all

of these resources available for cloud consumers. Broad network access allows

access over the network using any type of device. The network in this case

being the Internet. Cloud computing also has a characteristic of having

on-demand self-provisioning. Usually, that's done through a web interface. So,

for example, if a client requires more storage, they can simply click a few

buttons and it's done very quickly. It's a measured or metered service, and

that's how we pay for our usage of cloud computing. The rapid elasticity is a

result of both resource pooling and self-provisioning, where we can rapidly,

in our example, deploy more storage or even more virtual machines as required.
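
To show how a measured service turns usage into a bill, here is a minimal sketch. The hourly rate is a made-up number used only for the arithmetic, not a real Google Cloud price, and the function name `metered_cost` is hypothetical.

```python
from decimal import Decimal

# Made-up price per virtual machine hour, for illustration only.
HYPOTHETICAL_RATE_PER_VM_HOUR = Decimal("0.05")


def metered_cost(vm_hours_per_day, rate=HYPOTHETICAL_RATE_PER_VM_HOUR):
    """Total cost for a list of daily VM-hour readings at a flat hourly rate."""
    total_hours = sum(Decimal(hours) for hours in vm_hours_per_day)
    return total_hours * rate


# Two VMs for two days, scaled out to four VMs for a day, then back to one.
usage = [48, 48, 96, 24]
print(metered_cost(usage))  # 10.80
```

Because the bill follows the readings, scaling back on the last day lowers the cost for that day.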