This post continues the article below, so if you have not read it yet, please read that first and then come back here. https://annmaryjoseph.blogspot.com/2018/12/google-cloud-platform-concepts.html

Here I am talking about:

Google Cloud Storage Basics


Google Cloud Storage provides virtually unlimited data storage with global access, and it's available 24/7.

Why use Cloud Storage? You could use it, for example, to serve website content, to provide an archive for disaster recovery, or to distribute large data objects through direct download.

Google offers a number of Cloud Storage options, and which one you use depends on the application and the workload profile.

Cloud Storage options support structured, unstructured, transactional, as well as relational data types.

Cloud Storage solutions are available for a wide array of use cases, including mobile applications, hosting commercial software, data pipelines, and basic backup storage.

Google Compute Engine is an Infrastructure-as-a-Service offering for provisioning flexible and self-managed VMs hosted on Google's infrastructure.

Google Compute Engine (GCE) is the Infrastructure as a Service (IaaS) component of Google Cloud Platform. It is built on the global infrastructure that runs Google's search engine, Gmail, YouTube, and other services, and it lets users launch virtual machines (VMs) on demand.

VMs can be launched from standard images or from custom images created by users. GCE users must authenticate using OAuth 2.0 before launching VMs. Google Compute Engine can be accessed via the Developer Console, a RESTful API, or the command-line interface (CLI).

Supported virtual machine operating systems include a number of Windows-based operating systems as well as many of the common Linux distributions. Compute Engine integrates with other Google Cloud Platform services such as Cloud Storage, App Engine, and BigQuery, which extends the service to address more complex application requirements.

Google Compute Engine includes predefined machine configurations as well as the ability to define custom machine types that you can optimize for your organization's requirements. With Compute Engine, you can run large compute and batch jobs using preemptible VMs, which are very inexpensive, short-lived compute instances (a preemptible VM runs for at most 24 hours and can be reclaimed by Compute Engine at any time). Fixed pricing with no contracts or commitments simplifies the provisioning and shutdown of virtual machines. All data written to persistent disk using Compute Engine is encrypted transparently and then transmitted and stored in encrypted form, and Google Compute Engine also complies with a number of data and security certifications. Compute Engine offers local solid-state drive (SSD) block storage that is always encrypted. Local SSDs are physically attached to the server hosting the VM, supporting very high IOPS and low latency. With Google Compute Engine instances, maintenance is transparent.

Google data centers use live migration technology to provide proactive infrastructure maintenance, which improves reliability and security. Running virtual machines are automatically moved to nearby hosts, even while under extreme load, so that the underlying host machines can undergo maintenance. This means there is no need to reboot your virtual machines because of host software updates, or even in the event of some types of hardware failure. Compute Engine instances are highly scalable, and global load balancing technology distributes incoming requests across pools of Compute Engine instances in multiple regions. High-performance Compute Engine virtual machines boot quickly and have persistent disk storage. In addition, Compute Engine instances use Google's private global fiber network, with data centers located worldwide.

CPUs on Demand with Google Compute Engine

Google Compute Engine has predefined machine types ranging from micro instances to instances with 96 virtual CPUs and 624 GB of memory. The available configuration tiers include Standard, High memory, and High CPU. With Compute Engine, you can also create virtual machines with a custom configuration for your particular workloads: from 1 to 64 virtual CPUs and up to 6.5 GB of memory per virtual CPU.
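To make the custom machine type limits above concrete, here is a minimal Python sketch that checks a requested vCPU count and memory size and builds the machine type name. It uses the figures quoted in this post (1 to 64 vCPUs, at most 6.5 GB per vCPU) plus two N1 rules that I am adding as assumptions: the vCPU count must be 1 or an even number, and memory must be a multiple of 256 MB. The custom-CPUS-MEMORY_MB name format is how Compute Engine names custom machine types; the function name is my own, and it does not check every rule (for example, the minimum memory per vCPU).

```python
# Limits quoted in this post (circa 2018). MEMORY_STEP_MB and the
# "1 or even" vCPU rule are assumed N1 constraints, not from the post.
MAX_VCPUS = 64
MAX_GB_PER_VCPU = 6.5
MEMORY_STEP_MB = 256


def custom_machine_type(vcpus: int, memory_mb: int) -> str:
    """Return a custom machine type name such as 'custom-4-15360'.

    Raises ValueError if the request falls outside the limits above.
    """
    if not 1 <= vcpus <= MAX_VCPUS:
        raise ValueError(f"vCPUs must be between 1 and {MAX_VCPUS}")
    if vcpus > 1 and vcpus % 2 != 0:
        raise ValueError("vCPUs must be 1 or an even number")
    if memory_mb % MEMORY_STEP_MB != 0:
        raise ValueError(f"memory must be a multiple of {MEMORY_STEP_MB} MB")
    # 6.5 GB per vCPU, expressed in MB.
    max_memory_mb = int(vcpus * MAX_GB_PER_VCPU * 1024)
    if memory_mb > max_memory_mb:
        raise ValueError(f"at most {max_memory_mb} MB for {vcpus} vCPUs")
    return f"custom-{vcpus}-{memory_mb}"


print(custom_machine_type(4, 15360))  # 4 vCPUs with 15 GB of memory
```

For example, custom_machine_type(4, 15360) returns custom-4-15360, a name you could pass to gcloud compute instances create with --machine-type. Check the current Compute Engine documentation for up-to-date limits before relying on these numbers.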

This flexibility means that you can potentially save money, because you don't have to commit to a fixed-size, often oversized, virtual machine. Instead, you can tune the number of virtual CPUs and the amount of memory to fit the workload. By offering both custom and predefined machine types, Compute Engine lets you build infrastructure that is tailored to your organization's particular workload requirements.

Compute Engine is very scalable and there is no commitment, meaning that you aren't locked in to whatever configuration you initially choose. For example, thanks to the start/stop feature, you can stop an instance, change it to a smaller or larger custom machine type or to a predefined machine type, and start it again.

Cloud Storage

Google Cloud Platform offers a number of different storage and database options. Which you choose depends on the application, the data type, and the workload profile. Supported data types include structured, unstructured, transactional, and relational data. So let's have a look at the different options.

First, Persistent Disk. This is fully managed block storage suitable for virtual machines and containers, that is, for Compute Engine and Kubernetes Engine instances. It's also recommended for snapshots for data backup. Typical workloads include disks for virtual machines, read-only data sharing across multiple virtual machines, and quick, durable backups of virtual machines.

Google Cloud Storage is a scalable, fully managed, reliable, and cost-efficient object and blob store. It's recommended for images, pictures, videos, objects, and blobs, as well as unstructured data. Common workloads include streaming and storage of multimedia, custom data analytics pipelines, and archives, backups, and disaster recovery.

Next is Cloud Bigtable, a scalable, fully managed NoSQL wide-column database that is suitable for both real-time access and analytics workloads. Cloud Bigtable is indicated for low-latency read/write access, high-throughput analytics, and native time series support. Typical workloads include Internet of Things, streaming data, finance, adtech (advertising technology), monitoring, geospatial datasets and graphs, and personalization.

Cloud Datastore is a fully managed NoSQL document database for web and mobile applications. It's recommended for semi-structured application data, hierarchical data, and durable key-value data. Typical workloads include user profiles, product catalogs for e-commerce, and game state.

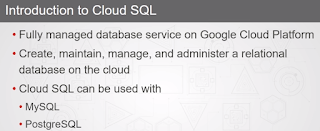

Google Cloud SQL is a fully managed MySQL and PostgreSQL database service built on the strength and reliability of Google's infrastructure. It's recommended for web frameworks, structured data, and online transaction processing (OLTP) workloads. Common workloads include websites and blogs, content management systems, business intelligence applications, ERP, CRM, and e-commerce applications, as well as geospatial applications.

Google Cloud Spanner is a mission-critical relational database service with strong transactional consistency at global scale. It's recommended for mission-critical applications, high-throughput transactions, and workloads with both scale and consistency requirements. Typical workloads include adtech, financial services, global supply chain, and retail.

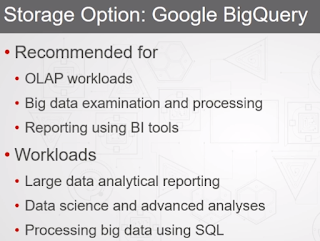

Google BigQuery is a scalable, fully managed enterprise data warehouse with SQL and very fast response times. Consequently, it's recommended for OLAP workloads, big data exploration and processing, and reporting using business intelligence tools. Typical workloads include analytical reporting on large data, data science and advanced analyses, and processing big data using SQL.

Finally, Google Drive. This is a collaborative space for storing, sharing, and editing files, including Google Docs. It's recommended for end-user interaction with docs and files, collaboration, and file synchronization between the cloud and local devices. Typical workloads include global file access via web, apps, and sync clients; coworker collaboration on documents; and backing up photos and other forms of media.

Google Cloud storage options also include a separate line for mobile. For example, Cloud Storage for Firebase is a mobile and web access service to Cloud Storage with serverless third-party authentication and authorization. Firebase Realtime Database is a real-time NoSQL JSON database for web and mobile applications. Firebase Hosting is production-ready web and mobile content hosting for developers. And Cloud Firestore for Firebase is a NoSQL document database that simplifies storing, querying, and syncing data for web and mobile apps, especially at global scale.

Google Cloud Shell

Google Cloud Shell is a shell environment for Google Cloud Platform that you can use to manage projects and resources. There's no need to install the Google Cloud SDK, because it comes pre-installed, along with the gcloud command-line tool and other utilities.

Cloud Shell is a temporary Compute Engine VM instance. When you activate Cloud Shell, it provisions a g1-small Compute Engine instance on a per-user, per-session basis, and the environment persists while the session is active. It also comes with a code editor, a beta version based on Orion, the open source development platform. You can also use Cloud Shell to browse file directories and to view and edit files.

Cloud Shell features include command-line access: you access the virtual machine instance in a terminal window. It supports opening multiple shell connections to the same instance, so you can, say, start a web server in one shell and perform other operations in another. You can also use Cloud Shell to launch tutorials, open the code editor, and download or upload files.

It comes with 5 GB of persistent disk storage, mounted as the $HOME directory on the virtual machine instance. This storage is per user and is available across multiple projects. Authorization is built in for access to projects and resources hosted on Cloud Platform: because you are already logged in to Cloud Platform, Cloud Shell uses those credentials.

So there's no need for additional authorization, and you have access to platform resources. However, what access you have to those resources depends on the role assigned to the Google Cloud Platform user you've logged in with. Cloud Shell also comes pre-installed with language support for Java, Go, Python, Node.js, PHP, Ruby, and .NET.

Cloud Shell comes with a number of pre-installed tools. For example, there are Linux shell interpreters and utilities, such as bash and sh, and the standard Debian system utilities. For text editors, you've got emacs, vim, and nano.

For source control, Cloud Shell supports Git and Mercurial. For Google SDKs and tools, you've got the Google App Engine SDK, the Google Cloud SDK, and gsutil for Cloud Storage. It also comes with some build and package tools pre-installed, for example Gradle, Make, and Maven, as well as npm, nvm, and pip. Additional tools include the gRPC compiler, the MySQL client, Docker, and IPython. Cloud Shell also provides a web preview function that allows you to run web apps on the virtual machine instance and preview them from the Cloud Console. The web applications must listen for HTTP requests on ports in the range 8080 to 8084. Those ports are only available to the secure Cloud Shell proxy service, which restricts access over HTTPS to your user account only.
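To try web preview, something has to be listening on one of those ports. Here is a minimal sketch using only Python's standard library (http.server); the names PREVIEW_PORTS, check_preview_port, HelloHandler, and make_server are my own, the port check simply mirrors the 8080 to 8084 range described above, and the greeting text is a placeholder.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Web preview only reaches apps listening on these ports (8080 to 8084).
PREVIEW_PORTS = range(8080, 8085)


def check_preview_port(port: int) -> int:
    """Return port unchanged if web preview can reach it, else raise ValueError."""
    if port not in PREVIEW_PORTS:
        raise ValueError(f"web preview needs a port from 8080 to 8084, got {port}")
    return port


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text greeting."""

    def do_GET(self):
        body = b"Hello from Cloud Shell\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 8080) -> HTTPServer:
    """Create (but do not start) a server on a web-preview-compatible port."""
    # An empty host string means "listen on all interfaces".
    return HTTPServer(("", check_preview_port(port)), HelloHandler)
```

In Cloud Shell you would then run make_server(8080).serve_forever() and open the app with the Web preview button in the Cloud Shell toolbar. http.server is used only to keep the sketch dependency-free; it is not meant for production traffic.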

To activate Google Cloud Shell, just click the Activate Google Cloud Shell icon in the toolbar. It opens in a pane at the bottom of the current window.

Now, to list your config defaults, just type:

gcloud config list

(Another command you'll come across is gcloud compute instances delete, which deletes one or more Google Compute Engine virtual machine instances.)

Now I'll just type clear and press Enter. To set a default, you use gcloud config set; for example, to set the default project, type gcloud config set project followed by your project ID.

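So now let's say that we want to set up our default compute zone, using gcloud config set compute/zone. I want a zone in the europe-west2 (London) region. Note that europe-west2 on its own is a region name; a zone name adds a letter suffix, such as europe-west2-a:

gcloud config set compute/zone europe-west2-a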

The available zone names are listed here: https://cloud.google.com/compute/docs/regions-zones/
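Because a zone name is its region name plus a letter suffix, the region can be derived from the zone, and a bare region name can be caught before it is used as a zone. A tiny Python sketch of that naming convention (region_of and ZONE_PATTERN are my own names, not part of any Google library, and the pattern assumes region names of the form area-directionN, such as us-central1):

```python
import re

# Zone names look like '<region>-<letter>', e.g. 'europe-west2-a';
# region names look like '<area>-<direction><number>', e.g. 'europe-west2'.
ZONE_PATTERN = re.compile(r"([a-z]+-[a-z]+\d+)-([a-z])")


def region_of(zone: str) -> str:
    """Return the region part of a zone name, e.g. 'europe-west2'."""
    match = ZONE_PATTERN.fullmatch(zone)
    if match is None:
        raise ValueError(f"{zone!r} does not look like a zone name")
    return match.group(1)


print(region_of("europe-west2-a"))
```

Passing a bare region name such as "europe-west2" raises an error here, since compute/zone expects a zone name with its letter suffix.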

And you can verify the setting using:

gcloud config list

Okay, now let's clear the screen by typing clear and pressing Enter. You can also see which components come pre-installed, as well as the Cloud SDK version you're currently using and the latest available version, by typing:

gcloud components list

I am on the latest version, but if you'd like to update, run the update with sudo:

sudo gcloud components update

If an update is available, press Y to confirm; this may take some time.

Again, the shell environment supports the standard Debian system utilities, which means that we can, for example, create an alias for a command. Let's type alias c=clear. Now if I type c, that's like issuing a clear command.

That also means that you can configure variables and so on by placing them directly in the .profile file in the home directory (~ or $HOME), which is where we are right now. If I type pwd, it shows my home directory, and if I type echo $HOME, it shows the same thing.

If I use tail to list the last ten lines of .profile (tail ~/.profile), you'll see that I have set up some environment variables in my profile. I've also set up an alias command there. So any time I launch the shell, that alias will be set up on this machine, and those environment variables will be populated with the values you see there. Okay, so that's Cloud Shell.

Setting Shell and Environment Variables

Environment variables are variables that are defined for the current shell and are inherited by any child shells or processes. They are used to pass information into processes that are spawned from the shell.

Shell variables are variables that are contained exclusively within the shell in which they were set or defined. They are often used to keep track of ephemeral data, like the current working directory.

We can see a list of all of our environment variables by using the env or printenv commands. The set command lists the shell variables as well (along with environment variables and shell functions). This is usually a huge list, so you probably want to pipe it into a pager program to deal with the amount of output easily:

set | less

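We will begin by defining a shell variable within our current session. This is easy to accomplish; we only need to specify a name and a value. We'll adhere to the convention of keeping all caps for the variable name and set it to a simple string, for example TEST_VAR='Hello World!'. Running export TEST_VAR afterwards turns it into an environment variable that child processes inherit.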
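The difference between a shell variable and an environment variable shows up as soon as a child process starts. This Python sketch runs two small sh scripts through subprocess and reports what a child shell sees; it assumes a POSIX sh is on the PATH (as it is in Cloud Shell), and TEST_VAR and child_sees are illustrative names of my own.

```python
import os
import subprocess

# TEST_VAR is an illustrative name. In the first script it is only a shell
# variable; in the second it is exported, so the child shell inherits it.
NOT_EXPORTED = "TEST_VAR='Hello World!'; sh -c 'echo \"[$TEST_VAR]\"'"
EXPORTED = "export TEST_VAR='Hello World!'; sh -c 'echo \"[$TEST_VAR]\"'"


def child_sees(script: str) -> str:
    """Run script in a fresh sh and return what its child shell printed."""
    # Start from an environment without TEST_VAR so the result is not skewed.
    env = {k: v for k, v in os.environ.items() if k != "TEST_VAR"}
    result = subprocess.run(["sh", "-c", script], env=env,
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()


print(child_sees(NOT_EXPORTED))  # prints [] because the child never saw TEST_VAR
print(child_sees(EXPORTED))      # prints [Hello World!]
```

The only difference between the two scripts is the export keyword, which is exactly what moves a variable from "shell only" to "inherited by child processes".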

Creating a Cloud Storage Bucket in Cloud Shell

Now we'll create a Cloud Storage bucket using the gsutil tool in Google Cloud Shell. gsutil is the Cloud Storage command-line tool.

gsutil is a Python application that lets you access Cloud Storage from the command line. You can use gsutil for a wide range of bucket and object management tasks, including creating and deleting buckets; uploading, downloading, and deleting objects; listing buckets and objects; moving, copying, and renaming objects; and editing object and bucket access control lists. Before we get started, note that I've logged into Google Cloud Platform and already activated Cloud Shell.

You need Python 2.7 for this, so please verify your version. It is already installed in Google Cloud Shell, but if you're using the Cloud SDK on your local machine, you'll need to install Python 2.7. To check whether the gsutil tool is installed, type gcloud components list.

More instructions are here:

https://cloud.google.com/storage/docs/gsutil

The command to create a bucket is:

gsutil mb gs://mybucket

And you can see the message it displays: ServiceException: 409 Bucket mybucket already exists.

Obviously, somebody else across Cloud Storage has already used that name, and that's the thing: the name of your bucket has to be unique across all of Cloud Storage. So let's try another name, mybucket1258, which might work:

gsutil mb gs://mybucket1258
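gsutil can only tell you whether a name is already taken by trying it, but the basic naming rules can be checked locally before you run gsutil mb. Below is a partial Python sketch covering the rules I'm confident about: 3 to 63 characters; only lowercase letters, digits, dashes, underscores, and dots; starting and ending with a letter or digit; no "goog" prefix, no "google" substring, and no IP-address-like names. It deliberately ignores the longer limit for dotted names and close misspellings of "google", and the function name is my own.

```python
import re

# Covers only the basic rules: 3-63 characters, lowercase letters, digits,
# dashes, underscores and dots, starting and ending with a letter or digit.
# Dotted names (allowed up to 222 characters) and close misspellings of
# "google" are NOT handled by this sketch.
NAME_PATTERN = re.compile(r"[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]")
IPV4_LIKE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Return True if name passes these local checks (not a uniqueness check)."""
    if not NAME_PATTERN.fullmatch(name):
        return False
    if name.startswith("goog") or "google" in name:
        return False
    # Names that look like an IP address in dotted-decimal form are not allowed.
    if IPV4_LIKE.fullmatch(name):
        return False
    return True


for candidate in ["mybucket1258", "MyBucket", "ab", "googbucket"]:
    print(candidate, looks_like_valid_bucket_name(candidate))
```

Here mybucket1258 passes, while MyBucket fails because of the uppercase letters. Passing this check does not mean the name is free: as the 409 error above showed, only Cloud Storage can tell you that.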

Next, I create some empty placeholder files with image names and then copy them to the new bucket I created:

touch london.png
touch delhi.png
touch apple.jpeg
ls

The commands to copy the images to the bucket I just created are below; I do one file at a time:

gsutil cp london.png gs://mybucket1258
gsutil cp delhi.png gs://mybucket1258
gsutil cp apple.jpeg gs://mybucket1258
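Copying one file per command works, but gsutil cp also accepts several source files in a single invocation, and the top-level -m option runs the transfers in parallel. As a sketch, here is a hypothetical Python helper (gsutil_upload is my own name) that builds such a command and, unless dry_run is set, runs it with subprocess; it assumes gsutil is installed and on the PATH, as it is in Cloud Shell.

```python
import subprocess
from typing import List, Sequence


def gsutil_upload(files: Sequence[str], bucket: str, parallel: bool = True,
                  dry_run: bool = False) -> List[str]:
    """Upload files to gs://<bucket> with a single gsutil cp call.

    Always returns the command; only executes it when dry_run is False.
    """
    if not files:
        raise ValueError("nothing to upload")
    cmd = ["gsutil"]
    if parallel:
        cmd.append("-m")  # -m is a global option, so it goes before 'cp'
    cmd += ["cp", *files, f"gs://{bucket}"]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


print(gsutil_upload(["london.png", "delhi.png", "apple.jpeg"],
                    "mybucket1258", dry_run=True))
```

Typed directly in Cloud Shell, the equivalent command is gsutil -m cp london.png delhi.png apple.jpeg gs://mybucket1258.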

Now go to GCP > Storage > Browser and refresh the bucket; the images are there.

Okay, now, it's always a good idea to clean up after yourself; you don't want to incur any charges if you don't have to. There are two commands for this: gsutil rb (remove bucket), or gsutil rm -r, which removes the bucket and everything underneath it. To use rb, the bucket must be empty, whereas gsutil rm -r removes all the contents and then the bucket itself.

gsutil rm gs://mybucket1258/*
gsutil rb gs://mybucket1258/

The first command deletes the contents of the bucket, and the second command deletes the bucket.

And now we'll see that we've got no buckets, so we successfully cleaned up after ourselves.

Data Analysis with GCP

Modern applications typically generate large amounts of data from many different sources. Devices and sensors can capture raw data in unprecedented volumes, and this data is well suited to analysis, which can provide insight into an organization's operating environment and business.

BigQuery is a serverless, highly scalable enterprise data warehouse on Google Cloud Platform. (In the context of a data warehouse, serverless means being able to simply store and query your data without having to purchase, rent, provision, or manage any infrastructure or software licensing.) It's fully managed, so there is no infrastructure to manage and no need for a database administrator, and an organization can focus on analyzing the data using SQL. It enables real-time capture and analysis of data by creating a logical data warehouse, and with BigQuery you can set up your data warehouse in minutes and start querying huge amounts of data.

Cloud Dataflow is a unified programming model and managed service from Google Cloud Platform that uses the Apache Beam SDK. The Apache Beam SDK supports powerful operations that resemble MapReduce operations, along with powerful data windowing and fine-grained correctness control for streaming or batch data. It's used for a wide range of data processing patterns, including streaming and batch computations and analytics, as well as extract, transform, load (ETL) operations. Since it's fully managed, it frees you from operational tasks like capacity planning, resource management, and performance optimization, so you can focus on your processing logic.
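To make "operations that resemble MapReduce" a little more concrete, here is a plain-Python sketch of the map, group-by-key, and combine steps that a word-count pipeline performs. It does not use the Apache Beam SDK (whose counterparts are transforms such as FlatMap, GroupByKey, and CombinePerKey); it only illustrates the data flow on an in-memory list, whereas Dataflow would distribute these steps across many workers. The function names are my own.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word, like a FlatMap step."""
    return [(word.lower(), 1) for line in lines for word in line.split()]


def group_by_key(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Collect all values that share a key, like a GroupByKey (shuffle) step."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def combine(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce each key's values to a single total, like CombinePerKey(sum)."""
    return {key: sum(values) for key, values in groups.items()}


lines = ["this is my first blog", "this is MY second blog"]
print(combine(group_by_key(map_phase(lines))))
```

Keeping each step a separate function mirrors how a Beam pipeline is built from independent transforms, which is what lets a runner such as Dataflow parallelize the map and combine steps and shuffle data between them.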

Cloud Dataproc is a fast, fully managed cloud service for running Apache Spark and Apache Hadoop clusters. You can take advantage of the Apache big data ecosystem using tools, libraries, and documentation for Spark, Hive, Hadoop, and even Pig. Because it's fully managed, you get automated cluster management, with managed deployment, logging, and monitoring. This means an organization can focus on the data, not the cluster, and clusters are scalable, speedy, and robust.

With Cloud Dataproc, clusters can also use Google Cloud Platform's flexible virtual machine infrastructure, including custom machine types and preemptible virtual machines, so clusters can be sized and scaled to fit the job. Since it runs on Google Cloud Platform, Cloud Dataproc integrates with several other Cloud Platform services, such as Cloud Storage, BigQuery, Bigtable, and Stackdriver Logging and Monitoring, which results in a comprehensive, powerful data platform. Versioning lets you switch between versions of big data tools such as Spark and Hadoop. You can also operate clusters with multiple master nodes and set jobs to restart on failure, ensuring high availability.

Google's Cloud SQL is a fully managed database service that simplifies the creation, maintenance, management, and administration of relational databases on Cloud Platform. Cloud SQL works with MySQL or PostgreSQL databases. Cloud SQL offers fully managed MySQL Community Edition database instances in the cloud, available in the US, EU, or Asia. Cloud SQL supports both first- and second-generation MySQL instances. Data encryption is provided on Google's internal networks, as well as for databases, temporary files, and backups. You can use the Cloud Platform Console to create and manage instances, and the service also supports instance cloning.

Cloud SQL for MySQL also supports secure external connections using the Cloud SQL Proxy or SSL. It replicates data between multiple zones with automatic failover. You can import and export databases either with mysqldump or as CSV files. Cloud SQL for MySQL offers on-demand backups and automated point-in-time recovery, as well as Stackdriver integration for logging and monitoring.

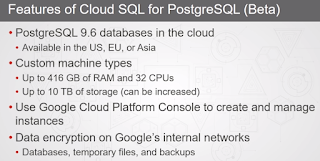

Cloud SQL for PostgreSQL is a Beta service from Google supporting PostgreSQL 9.6 database instances in the cloud, again available in the US, EU, or Asia. It supports custom machine types with up to 416 GB of RAM and 32 CPUs, as well as up to 10 TB of storage, which can be increased if required. As with Cloud SQL for MySQL, you can use the Cloud Platform Console to create and manage instances, and data encryption is provided on Google's internal networks and for databases, temporary files, and backups.

Cloud SQL for PostgreSQL supports secure external connections using the Cloud SQL Proxy or SSL. You can use SQL dump files to import and export databases. Cloud SQL also supports the PostgreSQL client-server protocol and standard PostgreSQL connectors. Cloud SQL for PostgreSQL offers on-demand and automated backups, as well as Stackdriver integration for logging and monitoring.

Creating a MySQL Database with Cloud SQL

I'm logged into my Google Cloud Platform account. I click the hamburger menu, scroll down, and click SQL under Storage. On the Cloud SQL page, I click Create Instance.

The next page asks you to choose a database engine: MySQL (version 5.6 or 5.7) or PostgreSQL (BETA, version 9.6). I'll leave MySQL selected and click Next. The following page offers two types of Cloud SQL MySQL instances, MySQL Second Generation (Recommended) and MySQL First Generation (Legacy), along with a Learn more link. I click Choose Second Generation.

The Create a MySQL Second Generation instance page has an Instance ID field, a Root password field (or a No password checkbox), Region and Zone drop-downs, a Create button, and an option to Show configuration options. Now we'll enter the instance information; I'll call this instance annmj.

You can leave the rest of the configuration at the defaults, or click Show configuration options to drill down into labels, maintenance schedule, database flags, authorized networks, and so on. We don't need those here, so we'll hide the configuration options and click Create. This returns us to the Instances page, where you'll see that the instance is initializing. Once the instance has initialized and started, we can connect to it using the MySQL client in Cloud Shell.

We have two choices. We can activate Cloud Shell manually, by clicking the icon in the upper-right corner of the toolbar, and optionally set some default configurations, for example:

gcloud config set project gcde-2019-197823
gcloud config set compute/zone us-central1-a

Or we can connect to the instance from its Instance details page.

To do that, click the instance, and on the instance details page, scroll down to the Connect to this instance tile, which displays the instance's IPv4 address and instance connection name. Click Connect using Cloud Shell, and Cloud Shell opens at the bottom of the screen.

Okay, so the connection command (a gcloud sql connect command for our instance) was populated in Cloud Shell automatically, so we'll just press Enter to connect as root. The message Whitelisting your IP for incoming connection for 5 minutes is displayed. Now you just have to wait a minute or two until it has finished whitelisting the IP for this connection; it makes a modification to the instance, and you'll see a notification saying done. Then click into the shell, enter the root password you set when creating the instance, and press Enter. A message displays: Welcome to the MariaDB monitor. Commands end with ; or \g. So now we're at the MySQL prompt. First, we'll create a database called blog:

CREATE DATABASE blog;

And we can see that it was successful. Now we'll insert some sample blog data into our new database. First, we specify that we want to use blog. Then we create the table blogs, which has three columns: bloggerName, content, and entryID, which is the primary key:

USE blog;
CREATE TABLE blogs (bloggerName VARCHAR(255), content VARCHAR(255), entryID INT NOT NULL AUTO_INCREMENT, PRIMARY KEY (entryID));

Next, we insert three rows:

INSERT INTO blogs (bloggerName, content) VALUES ('Leicester', 'this is MY first blog');
INSERT INTO blogs (bloggerName, content) VALUES ('London', 'this is MY first blog');
INSERT INTO blogs (bloggerName, content) VALUES ('Scotland', 'this is MY first blog');

Okay, so we should have some data. Let's type the following query (don't forget the semicolon) and press Enter, and there is our data:

SELECT * FROM blogs;
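If you want to experiment with the same schema and inserts without creating a Cloud SQL instance, a rough local stand-in is SQLite through Python's built-in sqlite3 module. This swaps in a different database engine, so it is only a sketch of the idea, not Cloud SQL: SQLite spells auto-numbering differently, so entryID is declared as INTEGER PRIMARY KEY, which numbers new rows automatically much as AUTO_INCREMENT does in MySQL. The sketch also uses parameterized inserts (the ? placeholders) instead of quoting the values by hand.

```python
import sqlite3

# SQLite stands in for Cloud SQL here; INTEGER PRIMARY KEY gives automatic
# row numbering similar to MySQL's AUTO_INCREMENT.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE blogs (bloggerName VARCHAR(255), content VARCHAR(255), "
    "entryID INTEGER PRIMARY KEY)"
)
rows = [("Leicester", "this is MY first blog"),
        ("London", "this is MY first blog"),
        ("Scotland", "this is MY first blog")]
# Parameterized inserts: the driver handles quoting, so no manual escaping.
conn.executemany("INSERT INTO blogs (bloggerName, content) VALUES (?, ?)", rows)
conn.commit()

for row in conn.execute("SELECT * FROM blogs ORDER BY entryID"):
    print(row)
```

Each printed row is a (bloggerName, content, entryID) tuple, with entryID filled in automatically.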

Now, to exit, just type exit and press Enter. It's always a good idea to clean up after yourself, so I'm going to close Cloud Shell and go back to our instance. We'll scroll up to the top of the Instance details page, click the ellipsis, and select Delete. We then have to type the instance name to confirm the deletion.

Creating a PostgreSQL Database with Cloud SQL

Okay, our PostgreSQL instance has initialized and started, and at this point we can connect to it using the psql client in Cloud Shell. We have a couple of options: we can manually activate Google Cloud Shell and optionally specify some configuration settings, or we can connect directly by clicking Connect using Cloud Shell on the Instance details page. This is the easiest way, so let's go ahead and do that.

When you see the connection command for your instance in Cloud Shell (here gcloud sql connect mypsqlinstance --user=postgres --quiet), press Enter and then enter the password. Then create the database:

CREATE DATABASE blog;

\connect blog

Enter the password when prompted.

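CREATE TABLE blogs (bloggerName VARCHAR(255), content VARCHAR(255), entryID SERIAL PRIMARY KEY);

PostgreSQL folds unquoted identifiers to lower case, so the bloggerName column is actually created as bloggername, which is why the INSERT statements use that spelling.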

Use \d+ table_name to see information about the columns of a table:

\d+ blogs

INSERT INTO blogs (bloggername, content) VALUES ('Joe', 'this is my first blog');
INSERT INTO blogs (bloggername, content) VALUES ('Wade', 'this is my first blog');
INSERT INTO blogs (bloggername, content) VALUES ('Bill', 'this is my first blog');
SELECT * FROM blogs;

Now, to quit the psql prompt, type \q and press Enter.

I was new to PostgreSQL, so I visited the site below for the basics.
